The ISC2 member survey “AI in Cyber 2024: Is the Cybersecurity Profession Ready?” revealed that 88% are seeing, or expect to see, AI impact their existing roles, with most seeing positives in the form of improved efficiency despite concerns over redundancy of human tasks.

After decades of being a construct of science fiction, artificial intelligence (AI), or at least advanced machine learning (ML), has made the leap from fiction to reality. As a concept and technology, it has enjoyed an exceptional acceleration in development and capability in the last decade.

That progress has particularly manifested itself in public-facing large language models (LLMs) such as ChatGPT, Google PaLM and Gemini, Meta’s LLaMA and more, all of which are forms of generative AI that can be leveraged to do almost anything, from creating a document on historic baseball wins to correctly writing and targeting a phishing email in the language of a criminal’s choosing. AI is everywhere, and while the cybersecurity industry was quick to adopt AI and ML as part of its latest generation of defensive and monitoring technologies, so too were bad actors, who are leaning on the same technology to elevate the sophistication, speed and accuracy of their own cybercrime activities.

ISC2 conducted a survey titled “AI in Cyber 2024: Is the Cybersecurity Profession Ready?” of 1,123 members who work, or have worked, in roles with security responsibilities to understand the realities of how AI is impacting everyday cybersecurity roles and tasks, as opposed to the perception of how its use intersects with the roles of professionals.

It’s Already Here

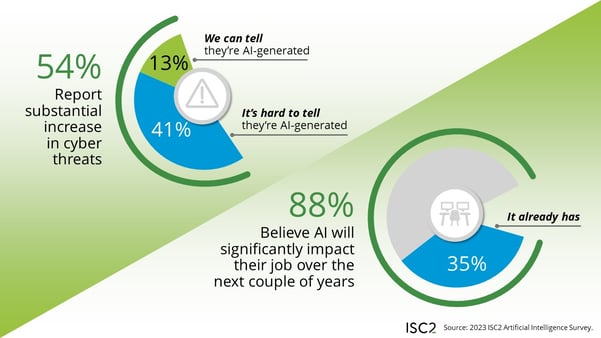

A combined 54% of respondents note that there has been a substantial increase in cyber threats over the last six months. That’s made up of 13% who are confident they can directly link that to AI-generated threats, and 41% who are unable to make a definitive connection. Arguably, if an AI LLM is working well, you won’t know the difference between automated and human-based attacks. The clues will be more nuanced, such as speed and repetition of attack that appear implausibly fast for a human (or a room full of humans) to conduct.
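The timing clue described above can be illustrated with a simple rate check. This is only a minimal sketch, not a method drawn from the survey: the function name, the event format (pairs of source and timestamp in seconds) and the threshold values are all assumptions chosen for illustration, and real detection tooling would weigh many more signals.

```python
from collections import defaultdict


def flag_implausibly_fast_sources(events, window_seconds=10.0, max_events=20):
    """Return the sources that produce more than max_events within any
    window_seconds span. Thresholds are illustrative assumptions only."""
    # Group timestamps by the source that generated them.
    by_source = defaultdict(list)
    for source, timestamp in events:
        by_source[source].append(timestamp)

    flagged = set()
    for source, stamps in by_source.items():
        stamps.sort()
        start = 0  # left edge of the sliding window
        for end, ts in enumerate(stamps):
            # Shrink the window until it spans at most window_seconds.
            while ts - stamps[start] > window_seconds:
                start += 1
            if end - start + 1 > max_events:
                flagged.add(source)
                break
    return flagged


# Hypothetical data: source "a" fires 50 events in 5 seconds,
# source "b" fires 5 events spaced 30 seconds apart.
sample_events = [("a", i * 0.1) for i in range(50)]
sample_events += [("b", i * 30.0) for i in range(5)]
fast_sources = flag_implausibly_fast_sources(sample_events)
```

A burst that no person (or room full of people) could plausibly produce is only a hint of automation, not proof that an AI model is behind it.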

Furthermore, 35% of those surveyed stated that AI is already impacting their daily job function. This is not a positive or negative measure, just recognition of the fact that AI plays a role, be that dealing with AI-driven attacks or working with AI-based tools such as automated monitoring applications and AI-driven heuristic scanning. Add to the above those who believe that AI will impact their job in the near future, and we see that more than eight in 10 (88%) expect AI to significantly impact their jobs over the next couple of years.

AI is Perceived as a Positive

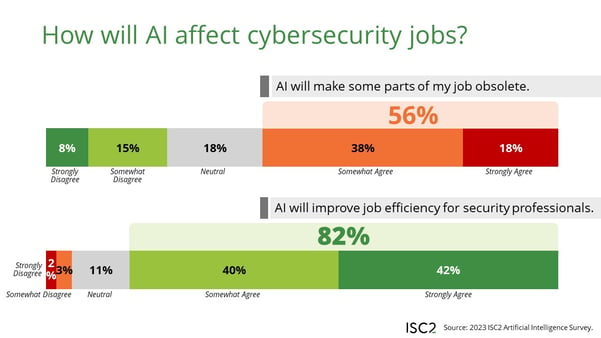

How will that impact actually manifest itself, as positive or negative input or interference? The survey respondents are highly positive about the potential for AI. Overall, 82% agree that AI will improve job efficiency for them as cybersecurity professionals. That is countered by 56% also noting that AI will make some parts of their job obsolete. Again, the obsolescence of job functions isn’t necessarily a negative, but rather reflects the evolving role of people in cybersecurity in the face of rapidly evolving and autonomous software solutions, particularly those charged with carrying out repetitive and time-consuming cybersecurity tasks.

When we asked respondents which job roles are being impacted by AI and ML, we quickly saw that it’s the time-consuming and lower-value functions, precisely the areas where organizations would rather not have skilled people tied up. For example:

- 81% noted the scope for AI and ML to support analyzing user behavior patterns

- This was followed by 75% who mentioned automating repetitive tasks

- 71% who see it being used to monitor network traffic for signs of malware

- A joint 62% who see it being used for predicting areas of weakness in the IT estate (also known as testing the fences) as well as automatically detecting and blocking threats

These functions all point to efficiencies over reductions in cybersecurity roles. With a global cybersecurity workforce of 5,452,732 according to the latest ISC2 Cybersecurity Workforce Study, combined with a global workforce gap of 3,999,964, it is unlikely that AI is going to make major inroads into closing the supply and demand divide, but it will play a meaningful role in allowing the 5,452,732 to focus on more complex, high-value and critical tasks, perhaps alleviating some of the workforce pressure.
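To put the two workforce figures above in proportion, the arithmetic can be sketched as follows. This assumes that total demand is simply the current workforce plus the reported gap, which is an illustrative simplification rather than a definition taken from the study; the function and variable names are hypothetical.

```python
def workforce_gap_summary(workforce: int, gap: int) -> dict:
    """Summarize the gap relative to total demand and to the current workforce.

    Assumes total demand = current workforce + reported gap (a simplification).
    """
    total_demand = workforce + gap
    return {
        "total_demand": total_demand,
        # Share of total demand that is currently unfilled.
        "gap_share_of_demand": gap / total_demand,
        # Growth the current workforce would need to close the gap.
        "required_growth": gap / workforce,
    }


# Figures quoted in the article.
summary = workforce_gap_summary(workforce=5_452_732, gap=3_999_964)
print(f"Unfilled share of demand: {summary['gap_share_of_demand']:.1%}")
print(f"Growth needed to close the gap: {summary['required_growth']:.1%}")
```

On these assumptions, roughly four in 10 of the roles needed are unfilled, which illustrates why efficiency gains alone are unlikely to close the divide.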

AI as a Threat

Aside from how AI has become a key tool for the cybersecurity industry itself, we must not overlook the role it is playing within the criminal community to support attacks and other malicious activities. Three quarters of those surveyed were concerned that AI is being used, or will be used, as a means for launching cyberattacks and other malicious criminal acts.

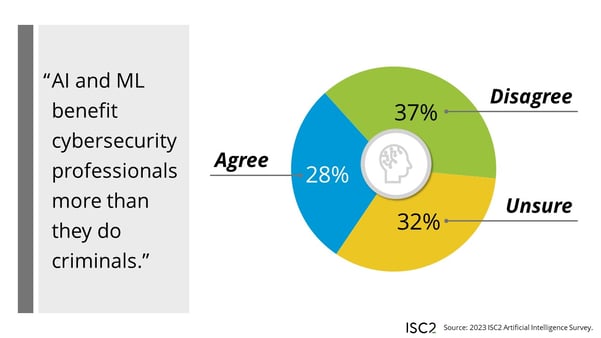

With an appreciation that the technology is already serving both sides, respondents were split on whether the current level of AI and ML maturity is more likely to benefit cybersecurity professionals than it is to help the criminals. The margins are slim: only 28% agreed, while 37% disagreed, leaving nearly a third (32%) still unsure whether AI is more of a help to cybersecurity than a hindrance.

What are the threats being launched off the back of AI technology? We asked respondents to list the ones that most concern them.

There was a very clear bias towards misinformation-based attacks and activities at the top of the list:

- Deepfakes (76%)

- Disinformation campaigns (70%)

- Social engineering (64%)

This year is set to be one of the biggest for democratic elections in history, with leadership elections taking place in seven of the world's 10 most populous nations:

- Bangladesh

- India

- U.S.

- Indonesia

- Pakistan

- Russia

- Mexico

Alongside these, major elections are taking place in key economic and resource-rich nations and blocs including the U.K., E.U., Iceland, South Africa, South Korea, Azerbaijan, Republic of Ireland and Venezuela.

These are alongside regional and mayoral elections that in total will see 73 countries (plus all 27 members of the European Union) go to the polls at least once in 2024. The U.K. will be the busiest at the ballot box, with 11 elections in total: 10 major regional elections, plus a general election for a new government and Prime Minister.

The fact that cybersecurity professionals are pointing to these types of information and deception attacks as the biggest concern is understandably a great worry for organizations, governments and citizens alike in this highly political year.

Other key AI-driven concerns are not attack based, but more regulatory and best-practice driven. These include:

- The current lack of regulation (59%)

- Ethical concerns (57%)

- Privacy invasion (55%)

- The risk of data poisoning – intentional or accidental – (52%)

Attack-based concerns do start to re-emerge further down the list of responses, with adversarial attacks (47%), IP theft (41%) and unauthorized access (35%) all noted by respondents as a concern.

Strangely, at the bottom of the list was enhanced threat detection. Some 16% of respondents are concerned that AI-based systems might be too good at what they do, potentially creating a myriad of issues for human operators, including false positives or overstepping into user privacy.

How Can We Be Better Prepared?

By their own admission, survey respondents noted there’s room for improvement, but the preparedness for an influx of AI technology and AI-based threats is not all negative:

- Some 60% say they could confidently lead the rollout of AI in their organization

- Meanwhile, only a quarter (26%) said they were not prepared to deal with AI-driven security issues

- More concerning was that four in 10 (41%) said they have little or no experience in AI or ML, while a fifth (21%) said they don’t know enough about AI to mitigate concerns

The conclusion is clear – education on AI use, best practice and efficiency is a crucial requirement for all organizations today.

Creating Effective AI Policy

Despite their recent acceleration in technological capability and advancement, AI and ML are both still in their technological infancy. This is brought into stark relief by the lack of organizational policy (as well as government regulation) regarding the acquisition, use, access to data and trust placed in AI technology:

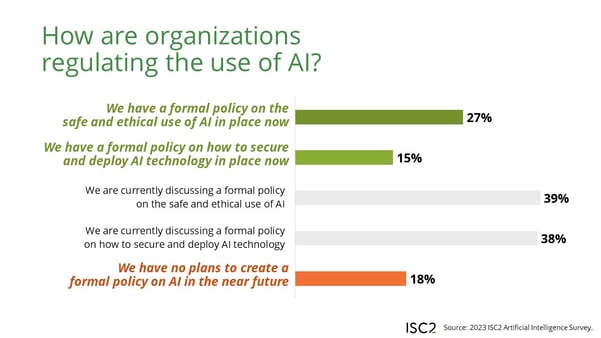

- Of the cybersecurity professionals we surveyed, only a quarter (27%) said their organizations had a formal policy in place on the safe and ethical use of AI

- Only 15% said they had a policy that covered securing and deploying AI technology today

- On the other side of the two questions, we find nearly 40% of respondents are still trying to develop a policy and a position. That’s 39% on ethics and 38% on safe and secure deployment

- Meanwhile, almost a fifth (18%) said their organizations have no plans to create a formal policy on AI in the near future

- Nearly one in five organizations surveyed are not ready for or preparing for AI technology either in or interfacing with their operations

Arguably, this is a cause for concern, particularly given that government regulation in many major economies has yet to catch up with the use of AI technology. That said, four out of five respondents do see a clear need for comprehensive and specific regulations governing the safe and ethical use of AI:

- Respondents were clear that governments need to take more of a lead if organizational policy is to catch up, even though 72% agreed that different types of AI will need their own tailored regulations

- Regardless, 63% said regulation of AI should come from collaborative government efforts (ensuring standardization across borders) and 54% want national governments to take the lead

- In addition, 61% would also like to see AI experts coming together to support the regulation effort, while 28% favor private sector self-regulation

- Only 3% want to retain the current unregulated environment

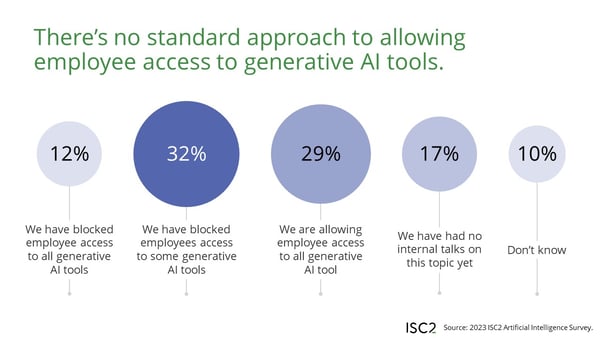

Given the lack of policy and regulation, how are organizations governing who should have access to AI technology? The fact is there is no common approach:

- The survey revealed that 12% of respondents said their organizations had blocked all access to generative AI tools in the workplace. So, no using ChatGPT to write that difficult customer letter any time soon!

- However, 17% are not even discussing the issue, while around a third each are allowing access to some (32%) or all (29%) generative AI tools in the workplace

- A further 10% simply don’t know what their organization is doing in this regard

What Does This Mean for You?

Ultimately, this study and the feedback of ISC2 members working on the front line of cybersecurity highlight that we are in an environment where threats are rising, at least partly due to the use of AI, at a time when the workforce is struggling to grow to meet the demand.

There are no standards on how organizations are approaching internal AI regulation, with little government-driven legal regulation at this stage. Cybersecurity professionals want meaningful regulations governing the safe and ethical use of AI to serve as a catalyst for formalizing operational policy.

There is no doubt that AI is going to change and redefine many cybersecurity roles, removing people from certain high-speed and repetitive tasks, but is less likely to eliminate human cybersecurity jobs completely. However, as the technology is still in its infancy, education is paramount. Education will play a critical role in ensuring that today’s and tomorrow’s cybersecurity professionals are able to adapt and fully utilize the efficiency and operational benefits of AI technology, maintain clear ethical boundaries as well as respond to AI-driven threats. Seeking out education opportunities is essential for cybersecurity professionals to continue playing a leading role in the evolution of AI in the sector.

- Register for our upcoming webinar on this research “AI in Cyber: Are We Ready?”

- ISC2 is holding a series of global strategic and operational AI workshops. Find one near you

- Sign up for our webinar on “Five Ways AI Improves Cybersecurity Defenses Today”

- Replay our two-part webinar series on the impact of AI on the cybersecurity industry: Part 1 and Part 2
