Digital trust is a major consideration for all cybersecurity professionals today, as well as for all users who expect safe and secure digital interactions. As Mohamed Mahdy, ISSAP, CISSP, SSCP, explains, the rise of Generative AI has had a fundamental impact on how we use and trust digital identity management.

Generative AI (Gen-AI) has become a buzzword in the IT industry, representing a groundbreaking shift in how we use and interact with artificial intelligence (AI). However, one critical aspect often overlooked in this new direction is identity management. Gen-AI has demonstrated significant potential for identity-related use cases, transforming how we approach access security. It's no longer just about authenticating and authorizing users to access data; the focus is shifting towards the adoption of machine identities and advanced authentication/authorization technologies that can keep pace with the rapid flow of data.

While ensuring secure and controlled access remains crucial, it is equally important that this access acts as an enabler rather than a gatekeeper. By examining the intersection of identity management and Gen-AI, we aim to highlight how robust identity solutions can facilitate seamless integration and utilization of AI technologies, driving innovation and safeguarding against potential threats.

Engaging with AI

While testing the features of an AI-powered chatbot recently, I asked what I thought was a simple question. And the ‘conversation’ went like this:

User: I need the solution for [xyz problem].

AI-Chat: Sorry, I can’t provide this. The answer contains sensitive material.

Looking at this, some challenges became apparent:

- Business Impact - The user’s work has been interrupted, and the AI is not actually helping. Instead of speeding things up, the introduction of AI has slowed the business down

- Security Impact - The user may try different approaches to manipulate the AI into giving them the answer. This may involve seeking backdoors or hacking their way around the AI engine to retrieve the requested answers

- Security and Compliance Impact - The user may unintentionally (or intentionally) cause the large language model (LLM) or input streams to behave maliciously. The system may attempt to use data flows that aren’t allowed - for example, processing data across different regions, causing compliance issues

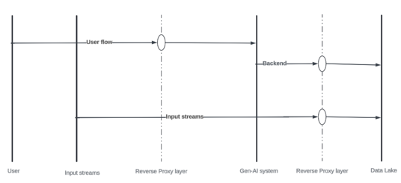

Let’s consider the ecosystem. First, there are three main contributors to the data:

- The data owner, who assigns the sensitivity and decides who can access it

- The Gen-AI user who, in our case, is asking questions of the chatbot and expecting answers

- The Gen-AI system, which includes but is not limited to workloads, the LLM engine and input streams (IoT devices, search engines, etc.)

Second, we have three main flows:

- The user initiates a request

- The backend systems receive the request and process the already-obtained data

- The workloads interact with each other or with input streams to generate more data

Identity is No Longer Straightforward

Identity has always been a fundamental concept in information security. It refers to a set of attributes and characteristics used to distinguish a user or entity. Looking at the flows defined above, we can see how challenging identity becomes: we have both machines and users, each with different access control requirements.

How can we provide identification, authentication, authorization, and accountability? We must consider that the speed required to handle access control requirements differs between users and machines. And, because these systems are so distributed, third-party feeds need to be incorporated into the access control process to make sure endpoints remain healthy and compliant at all times. Since we are talking about huge volumes of data, a malicious actor could, in just a few seconds, have a major impact on the LLM logic and the expected output.
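To make the four functions more concrete, here is a minimal Python sketch of a single authorization point that serves both users and machines. It assumes authentication has already happened upstream, and everything named in it (`Subject`, `authorize`, the `UNHEALTHY_ENDPOINTS` set standing in for a third-party health feed, and the in-memory `AUDIT_LOG`) is hypothetical rather than any product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class SubjectType(Enum):
    USER = "user"
    MACHINE = "machine"


@dataclass
class Subject:
    subject_id: str            # identification: who or what is asking
    subject_type: SubjectType  # human user or machine/workload
    endpoint_id: str           # device the request comes from
    authenticated: bool        # outcome of an earlier authentication step (assumed)
    roles: set = field(default_factory=set)


@dataclass
class Decision:
    allowed: bool
    reason: str


# Hypothetical endpoints that a third-party health/compliance feed has flagged.
UNHEALTHY_ENDPOINTS = {"cam-17"}

# Accountability: every decision is appended here (stand-in for a real audit log).
AUDIT_LOG = []


def authorize(subject: Subject, required_role: str) -> Decision:
    """Authorize one request, consult the health feed, and record the outcome."""
    if not subject.authenticated:
        decision = Decision(False, "not authenticated")
    elif subject.endpoint_id in UNHEALTHY_ENDPOINTS:
        decision = Decision(False, "endpoint flagged by health feed")
    elif required_role not in subject.roles:
        decision = Decision(False, f"missing role {required_role!r}")
    else:
        decision = Decision(True, "ok")
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "subject": subject.subject_id,
        "type": subject.subject_type.value,
        "allowed": decision.allowed,
        "reason": decision.reason,
    })
    return decision


camera = Subject("cam-17", SubjectType.MACHINE, "cam-17", True, {"ingest"})
analyst = Subject("alice", SubjectType.USER, "laptop-42", True, {"finance-read"})
print(authorize(camera, "ingest"))         # Decision(allowed=False, reason='endpoint flagged by health feed')
print(authorize(analyst, "finance-read"))  # Decision(allowed=True, reason='ok')
```

Recording the subject type with each decision keeps human and machine activity separable for later accountability reviews. A real deployment would also have to meet the much tighter latency budgets of machine traffic, which this sketch does not attempt.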

Data Processing and Labeling

At the time the LLM engines collect and process data, labels need to be in place: each data item must be properly labeled and have access rules attached. For example, data may be gathered from the Finance department on the condition that it can subsequently be accessed only by employees from the Finance and HR departments.
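A minimal sketch of label-based access in Python, assuming each item carries a label set by the data owner and that a reader is identified only by department. `Label`, `DataItem`, `ingest_item` and `visible_items` are illustrative names, not part of any specific product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Label:
    sensitivity: str                # assigned by the data owner, e.g. "confidential"
    allowed_departments: frozenset  # departments that may read the item later


@dataclass(frozen=True)
class DataItem:
    item_id: str
    source_department: str
    label: Label


def ingest_item(item_id: str, source_department: str, label: Optional[Label]) -> DataItem:
    """Refuse to admit data into the LLM pipeline unless it carries a usable label."""
    if label is None or not label.allowed_departments:
        raise ValueError(f"{item_id}: unlabeled data cannot be ingested")
    return DataItem(item_id, source_department, label)


def can_read(item: DataItem, user_department: str) -> bool:
    """A reader qualifies only if their department is on the item's access list."""
    return user_department in item.label.allowed_departments


def visible_items(items: List[DataItem], user_department: str) -> List[DataItem]:
    """Filter what the Gen-AI engine may draw on when answering this user."""
    return [item for item in items if can_read(item, user_department)]


# The Finance example: gathered from Finance, readable later by Finance and HR only.
q3_report = ingest_item(
    "fin-q3-001", "Finance", Label("confidential", frozenset({"Finance", "HR"}))
)
handbook = ingest_item(
    "hr-handbook", "HR", Label("internal", frozenset({"Finance", "HR", "Engineering"}))
)

print([i.item_id for i in visible_items([q3_report, handbook], "Engineering")])
# ['hr-handbook']
```

Rejecting unlabeled items at ingestion, rather than at query time, means the engine never holds data whose access rules are unknown.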

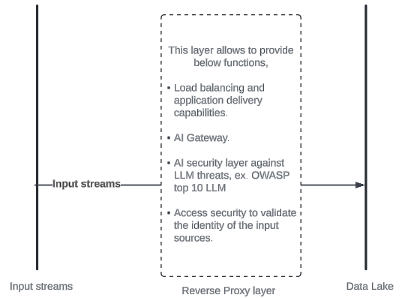

When data is ingested through input streams (for example, monitoring cameras or smart devices), each device is authenticated using its machine identity before its data is delivered to the data lake for the LLM engine to start its work. The access solution would also continue to monitor and receive feeds from third-party systems so that it can block any malicious machine.
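The sketch below illustrates this ingestion gate under simplifying assumptions. Each device authenticates by signing its payload with a pre-shared per-device key (HMAC-SHA256 from Python's standard library), and a hypothetical `apply_threat_feed` call stands in for updates from a third-party system. Production deployments more commonly rely on certificates and mutual TLS for machine identity; HMAC is swapped in here only to keep the example self-contained.

```python
import hashlib
import hmac

# Hypothetical per-device keys provisioned at enrollment (a stand-in for certificates/mTLS).
DEVICE_KEYS = {"cam-01": b"cam-01-demo-key", "sensor-09": b"sensor-09-demo-key"}

# Hypothetical blocklist, updated from a third-party threat or health feed.
BLOCKED_DEVICES = set()

# Stand-in for the data lake the LLM engine reads from.
DATA_LAKE = []


def sign(device_id: str, payload: bytes) -> str:
    """What an enrolled device would do: sign its payload with its own key."""
    return hmac.new(DEVICE_KEYS[device_id], payload, hashlib.sha256).hexdigest()


def ingest_from_device(device_id: str, payload: bytes, signature: str) -> bool:
    """Admit data only from a known, unblocked device whose signature verifies."""
    key = DEVICE_KEYS.get(device_id)
    if key is None or device_id in BLOCKED_DEVICES:
        return False
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return False
    DATA_LAKE.append((device_id, payload))
    return True


def apply_threat_feed(flagged_devices) -> None:
    """Block every device the external feed reports as malicious."""
    BLOCKED_DEVICES.update(flagged_devices)


payload = b'{"camera": "cam-01", "motion": true}'
good_sig = sign("cam-01", payload)
print(ingest_from_device("cam-01", payload, good_sig))      # True
print(ingest_from_device("cam-01", b"tampered", good_sig))  # False
apply_threat_feed(["cam-01"])
print(ingest_from_device("cam-01", payload, good_sig))      # False: device now blocked
```

Because the blocklist is checked on every submission, a device flagged by the external feed is cut off immediately, even though its key is still valid.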

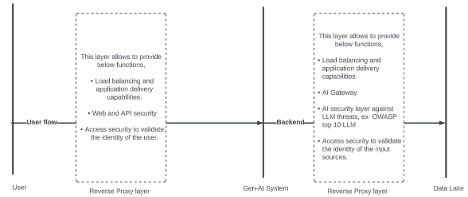

Data access

In this phase, we must apply the access rules to the data the Gen-AI engine returns to the user. It would therefore be appropriate to apply Single Sign-On and MFA technologies in the access solution, which would then be able to fetch the identity store attributes and enforce the rules on the passing traffic.

Continuing with the earlier financial data example: once the Gen-AI engine identifies a specific data item as requiring extra checks, the Gen-AI system would send back, for example, a 401 code (or a query for specific user attributes), requesting extra authentication/authorization.

The in-line access solution would perform SSO (or federation) based on the applied rules.

The access solution would then fetch the user's attributes and compare them with the received flows to allow or deny each flow.
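The step-up exchange described above can be sketched as follows. This is an illustration under stated assumptions, not a reference design: the engine, the identity store and the SSO step are all stand-ins (`gen_ai_engine`, `IDENTITY_STORE` and `sso_authenticate` are hypothetical), and the 401 is modeled as a plain status field rather than a real HTTP response.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class EngineResponse:
    status: int  # 200 = answer released, 401 = extra checks needed
    body: str = ""
    required: Dict[str, Set[str]] = field(default_factory=dict)  # attribute -> allowed values


# Hypothetical identity store; in practice a directory or identity provider.
IDENTITY_STORE = {
    "alice": {"department": "Finance"},
    "carol": {"department": "HR"},
    "bob": {"department": "Engineering"},
}


def satisfies(attributes: Dict[str, str], required: Dict[str, Set[str]]) -> bool:
    """True if every required attribute has one of its allowed values."""
    return all(attributes.get(name) in allowed for name, allowed in required.items())


def gen_ai_engine(question: str, verified: Optional[Dict[str, str]] = None) -> EngineResponse:
    """Stand-in engine: treats revenue questions as Finance data readable by Finance and HR."""
    required = {"department": {"Finance", "HR"}} if "revenue" in question.lower() else {}
    if satisfies(verified or {}, required):
        return EngineResponse(200, body=f"answer to: {question}")
    return EngineResponse(401, required=required)


def sso_authenticate(user: str) -> bool:
    """Placeholder for an SSO/federation round trip (with MFA); here, known users pass."""
    return user in IDENTITY_STORE


def access_proxy(user: str, question: str) -> str:
    """In-line access solution: step up on 401, fetch attributes, compare, allow or deny."""
    response = gen_ai_engine(question)
    if response.status == 200:
        return response.body
    if not sso_authenticate(user):
        return "denied: authentication failed"
    attributes = IDENTITY_STORE[user]
    if not satisfies(attributes, response.required):
        return "denied: attributes do not meet the data's access rule"
    return gen_ai_engine(question, verified=attributes).body


print(access_proxy("carol", "What was Q3 revenue?"))  # answer to: What was Q3 revenue?
print(access_proxy("bob", "What was Q3 revenue?"))    # denied: attributes do not meet the data's access rule
```

In this sketch the engine simply trusts the attributes the proxy passes back; in practice they would travel as a signed assertion or token so the engine can verify where they came from.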

Identity is Evolving, So Are Access Solutions

On the basis of what we've explored here, we can see the new requirements for, and capabilities needed by, access solutions. These include the ability to:

- Communicate and work with different identity providers. Not all organizations upgrade their identity stores as fast as they adopt new business use cases and applications (including Gen-AI-related ones), so we need a solution that can talk to modern applications and still communicate with legacy ones

- Adapt to technology requirements - for example, Multi-Factor Authentication (MFA) and Single Sign-On (SSO)

- Support different types of authentications that can work with both users and machines

- Integrate with third-party systems, such as End User Behavior Analytics (EUBA) tools, to gain insights that enable continuous monitoring

- Be deployed in a distributed manner while providing global visibility and control over deployed instances
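As one way to meet the first and third requirements, an access solution could normalize attributes from several identity providers behind a common interface. The Python sketch below assumes two hypothetical adapters: one for a legacy directory and one for a modern IdP that also describes machine identities. The attribute names (`ou`, `dept`, `kind`) are invented for illustration.

```python
from typing import Dict, List, Optional, Protocol


class IdentityProvider(Protocol):
    name: str

    def lookup(self, subject_id: str) -> Optional[Dict[str, str]]:
        """Return normalized attributes, or None if this provider doesn't know the subject."""
        ...


class LegacyDirectory:
    """Hypothetical adapter for an older directory with its own attribute names ("ou")."""
    name = "legacy-directory"

    def __init__(self, records: Dict[str, Dict[str, str]]):
        self._records = records

    def lookup(self, subject_id: str) -> Optional[Dict[str, str]]:
        record = self._records.get(subject_id)
        if record is None:
            return None
        return {"department": record["ou"], "kind": "user"}  # legacy store knows only users


class ModernIdP:
    """Hypothetical adapter for a modern IdP whose claims also cover workloads ("kind")."""
    name = "modern-idp"

    def __init__(self, claims: Dict[str, Dict[str, str]]):
        self._claims = claims

    def lookup(self, subject_id: str) -> Optional[Dict[str, str]]:
        claims = self._claims.get(subject_id)
        if claims is None:
            return None
        return {"department": claims["dept"], "kind": claims.get("kind", "user")}


class IdentityBroker:
    """Query each provider in order; return the first match, tagged with its source."""

    def __init__(self, providers: List[IdentityProvider]):
        self.providers = providers

    def resolve(self, subject_id: str) -> Optional[Dict[str, str]]:
        for provider in self.providers:
            attributes = provider.lookup(subject_id)
            if attributes is not None:
                return {**attributes, "source": provider.name}
        return None


broker = IdentityBroker([
    ModernIdP({"svc-llm-ingest": {"dept": "AI Platform", "kind": "machine"}}),
    LegacyDirectory({"alice": {"ou": "Finance"}}),
])
print(broker.resolve("alice"))
# {'department': 'Finance', 'kind': 'user', 'source': 'legacy-directory'}
```

Keeping the provider-specific mapping inside each adapter means a new identity store can be added without touching the policy logic that consumes the normalized attributes.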

Access Solutions as an Enabler

Why do we try to make things easier? Why don’t we just block the access requests? The answer is two-fold: accessibility and efficiency. Both help drive users to adopt the security measures put in place to secure AI-related work.

In a study published by the National Science Foundation (NSF), 67% of employees had failed to stay fully compliant with security policies at least once. When asked why, around 85% of the answers included:

- To perform the job more effectively

- To accomplish something I needed

- To assist others

A final interesting statistic: only 3% of total breaches were initiated with malicious intent. Well-designed security controls not only make the employee’s job easier but, at the same time, provide crucial security that could prevent a significant volume of attacks.

Mohamed Mahdy, SSCP, CISSP, ISSAP, has 11 years of experience in IT and cybersecurity across the service provider, defense, government, financial and other sectors. Mohamed has held support, consulting and product management roles, with responsibility for implementation, support, solution design, architecture and technical marketing activities for network and application security solutions.