As organizations increasingly rely on machine learning for critical decision-making, ensuring the security of ML systems becomes paramount. MLSecOps is a vital discipline that integrates SecOps with ML operations to protect models, data and the ML infrastructure.

One of the more enjoyable elements of the IT and cybersecurity sectors is

that just when you thought it had enough abbreviations, another one leaps

out. Among the latest is MLSecOps,

defined as: “the integration of security practices and considerations into the

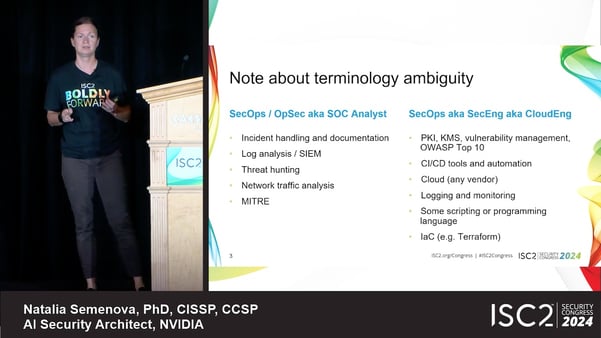

[machine learning] development and deployment process”. Natalia Semenova,

PhD, CISSP,

CCSP, AI security architect for Nvidia, presented at ISC Security Congress 2024

in Las Vegas,

Jack

of More Trades: Introduction to MLSecOps for DevSecOps Professionals, with the specific aim of helping those already familiar

with DevOps, perhaps even DevSecOps, to apply their understanding in the

field of ML. A live survey during the session showed a mere 14% already have

it in their business, with almost 50% saying they had no current plans to

introduce it either, and so a bit of exposure and explanation of the concept

was appropriate.

It's no surprise that a talk with ML in the title was presented by someone from Nvidia. Anyone looking to do ML will reach for hardware that is heavy on Graphics Processing Units (GPUs), because around 15 years ago it was discovered, almost serendipitously, that they are incredibly well-suited to executing artificial intelligence (AI) and ML algorithms. That said, Semenova correctly predicted that, in another live survey, those in the audience with plans to look at ML would choose to do so in the cloud: “If you are just planning, or you already implemented, where do you want to implement your pipelines?” she asked. “On premise or in the cloud? I expect the cloud to win, because right now, the hardware that supports machine learning is quite expensive, and if you are just trying, of course, it doesn't make much sense to invest in your own hardware unless you are a vendor.” (In the straw poll in the room, cloud beat on-premise by a factor of about two and a half.)

MLSecOps and What it Means

When you read the definition earlier, did you really take in what it was saying? MLSecOps is not about applying ML to SecOps, but the precise opposite: taking our ML ops world and introducing SecOps so that we can make it more secure. In Semenova’s words, MLSecOps “integrates SecOps with ML operations to protect models, data and the ML infrastructure”. This is why deep knowledge of ML isn’t necessary at a high level: we are taking what we know about SecOps and applying it to an area of tech that we might not know, but whose basics we can grasp fairly easily, so that we at least understand where the SecOps elements need to intervene.

All the components we add to the ML operations world are similar to those of any other SecOps exercise: Identity and Access Management (IAM), threat modelling, policy compliance, the Software Bill of Materials (SBOM, which in this world becomes the ML-BOM), digital signing, rate limiting, data security and so on. None of it is a surprise. Looking back at when SecOps became a thing, what we introduce with MLSecOps is precisely the same, just applied to a different technology in the ops segment.
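
Rate limiting is perhaps the easiest item on that list to picture in code. On an ML endpoint, a common motivation is to slow down automated bulk querying, such as someone harvesting a model's answers at scale. The following is a minimal sketch of a per-client token bucket, under stated assumptions: the class name `TokenBucketLimiter`, its `capacity` and `rate` parameters and the idea of keying on a client ID are all hypothetical, and a real deployment would more likely enforce this at an API gateway than in application code.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Hypothetical per-client token bucket: each client may burst up to
    `capacity` requests, and tokens refill continuously at `rate` per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        # client_id -> (tokens remaining, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_id: str) -> bool:
        """Consume one token if available; return whether the request may proceed."""
        now = self.clock()
        # New clients start with a full bucket.
        tokens, last = self._buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client_id] = (tokens - 1, now)
            return True
        self._buckets[client_id] = (tokens, now)
        return False


# Usage with a fake clock so the behaviour is deterministic.
fake_now = [0.0]
limiter = TokenBucketLimiter(capacity=3, rate=1.0, clock=lambda: fake_now[0])
print([limiter.allow("client-a") for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 1.0  # one second later, one token has been refilled
print(limiter.allow("client-a"))  # True
print(limiter.allow("client-b"))  # True: buckets are per client
```

A token bucket is used here because it tolerates short bursts from a legitimate user while capping sustained throughput; a fixed-window counter would be simpler but lets a client roughly double its allowance across a window boundary.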

And this is where we stop using words like “simple” and “straightforward”, because the complexity, of course, lies in the low-level doing. Semenova gave the example of securing a Jupyter notebook in an ML setup: most of us have never had to do this in any kind of setup, so understanding how such things work and how to secure them is a learning curve to scramble up. We can identify similarities with things we already know (in fact, Semenova noted that if you know how to secure containers, you’re part-way there with her Jupyter example), but at some point we will hit something we are not used to.

Validating MLSecOps

One example raised in the presentation was the same issue we often find when auditing ML- or AI-based systems: output validation. By its nature, ML does not apply a static algorithm that turns a set of inputs into a computable output; instead, we cannot definitively predict the output that will result from any specific input. Semenova also cited a related issue that is easy to forget: when an ML algorithm runs over a given data set, it runs over that entire data set, regardless of who is asking. “One example, let's say you have a chat bot that would advise employees on their benefits. So everybody can ask this bot: how much would I earn the next month? So, based on the category, on bonuses, on productivity, the bot may be able to provide this information. But what prevents [an] employee from asking, how much would the CIO earn next month? Basically nothing if you don't put controls. As this bot knows everything about everyone, they would just provide this output unfiltered.” This is something we could control with, say, row-level permissions in a relational database, but how to do it in an ML world is a whole new concept for us in SecOps.
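
One way to get the effect of row-level permissions in the benefits-bot example is to enforce authorization where the bot retrieves data, so that records the user may not see never enter the model's prompt context at all. This is a minimal sketch assuming a retrieval-style bot that looks up records at query time; `PayRecord`, `visible_records`, `build_prompt_context`, the `hr_admin` role and the sample records are all hypothetical. It does not help if the sensitive data was used to train the model itself, since the model could then reproduce it without any lookup.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class PayRecord:
    """One row of hypothetical HR data the bot can look up."""
    employee_id: str
    manager_id: str
    projected_pay: int


def visible_records(requester_id: str, roles: FrozenSet[str],
                    records: Iterable[PayRecord]) -> List[PayRecord]:
    """Row-level filter: HR admins see every row; anyone else sees only
    their own row and the rows of their direct reports."""
    if "hr_admin" in roles:
        return list(records)
    return [r for r in records
            if requester_id in (r.employee_id, r.manager_id)]


def build_prompt_context(requester_id: str, roles: FrozenSet[str],
                         records: Iterable[PayRecord]) -> str:
    """Only rows that pass the filter are ever placed in the model's context."""
    rows = visible_records(requester_id, roles, records)
    return "\n".join(f"{r.employee_id}: {r.projected_pay}" for r in rows)


records = [
    PayRecord("cio", "ceo", 40000),
    PayRecord("e100", "m7", 5200),
    PayRecord("e101", "m7", 5400),
]

# An ordinary employee asking about the CIO gets no CIO data into the prompt.
print(build_prompt_context("e100", frozenset(), records))  # e100: 5200
# Manager m7 sees the rows of both direct reports.
print(build_prompt_context("m7", frozenset(), records))
```

Filtering before the model sees the data, rather than trying to scrub its answer afterwards, keeps the access decision deterministic, which matters precisely because the model's output cannot be predicted.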

So, then, new concepts abound as we try to apply our SecOps techniques to ML, but, as we have already hinted, they are often developments of things we know. We mentioned earlier that the SBOM has become an ML-BOM: the SBOM is a detailed breakdown of the software components in a system, while the ML-BOM is an enumeration of the AI technologies in an ML system, such as the models and the datasets used to train them. And sure enough, the similarities and overlaps are plentiful. Semenova confirmed this: “MLSecOps has the same stages identified as DevSecOps. It has the plan build stage … then you have test deploy phase, again pretty much similar to DevSecOps … and then finally, you have the operate monitor phase”.
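
To make the ML-BOM idea concrete, here is a minimal sketch that records a model and its training datasets together with SHA-256 digests, then checks a model file against the recorded digest before it is used. The field layout is loosely modelled on the CycloneDX JSON format, which added machine-learning components in version 1.5; treat the exact field names as an assumption to verify against the specification. The function names and sample inputs are hypothetical, and a digest alone is not a digital signature: in practice the BOM itself would also be signed.

```python
import hashlib
import json
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def make_ml_bom(model_name: str, model_version: str, model_bytes: bytes,
                datasets: Dict[str, bytes]) -> dict:
    """Build a minimal CycloneDX-style ML-BOM (hypothetical helper)."""
    components: List[dict] = [{
        "type": "machine-learning-model",
        "name": model_name,
        "version": model_version,
        "hashes": [{"alg": "SHA-256", "content": sha256_hex(model_bytes)}],
    }]
    # One "data" component per training dataset, in a stable order.
    for name, data in sorted(datasets.items()):
        components.append({
            "type": "data",
            "name": name,
            "hashes": [{"alg": "SHA-256", "content": sha256_hex(data)}],
        })
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": components,
    }


def verify_model(bom: dict, model_bytes: bytes) -> bool:
    """True only if the model bytes match the digest recorded in the BOM."""
    expected = next(
        c["hashes"][0]["content"]
        for c in bom["components"]
        if c["type"] == "machine-learning-model"
    )
    return sha256_hex(model_bytes) == expected


# Stand-in bytes; in practice these would be read from the model and data files.
model = b"serialized model weights"
bom = make_ml_bom("benefits-bot", "1.2.0", model,
                  {"hr-policy-corpus": b"example training text"})
print(json.dumps(bom, indent=2))
print(verify_model(bom, model))          # True
print(verify_model(bom, model + b"!"))   # False: the artifact was altered
```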

Adoption Timeline

How long will it take those in SecOps or DevSecOps to become fully accomplished MLSecOps specialists? Well, how long do you want to take? Scratching the surface might take a few days or weeks: long enough to become comfortable with the fundamental concepts and say, “I feel like I know about this.” At the other extreme, Semenova walked through a potential six-month learning path, beginning with fairly simple elements such as the OWASP Top 10 for LLM Applications or AI-assisted code development tools such as Amazon CodeWhisperer, and working up to deep knowledge of frameworks such as MITRE ATLAS and standards such as ISO/IEC 42001 and ISO/IEC 23053.

In short, then: most people with SecOps knowledge have little or no in-depth knowledge of ML: how it works and, in particular, how it is developed and operated. But all is not lost, because in most SecOps scenarios we find ourselves working with projects, systems and technologies whose intricacies we do not understand in depth, since it’s the project or technical team whose job it is to know that stuff. Yet we will all recall instances where we began with little or no knowledge and before long found ourselves understanding the details pretty well.

And as a parting shot, remember that MLSecOps is very much in its infancy – in fact many people attending Congress and/or reading this had never even heard of it. Getting to grips with it now may well put us ahead of most of our peers and competitors, and may make our skills saleable in the job market!
